LLMs are trained on only 5% of the Internet — here's why that matters

Most people think Large Language Models (LLMs) like ChatGPT or Claude know everything on the internet. They don’t. In fact, they only know the surface — literally.

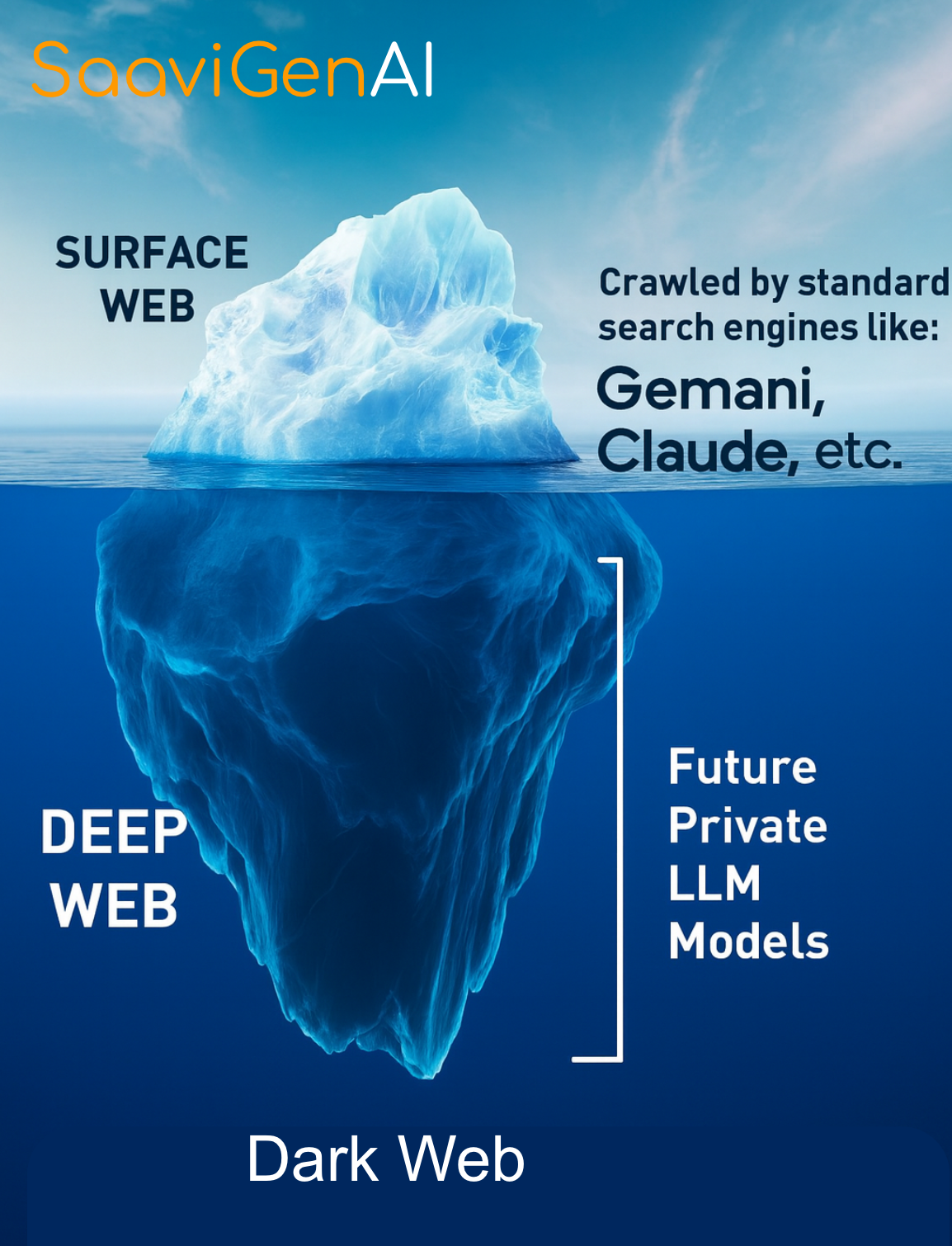

The Internet is an iceberg

The World Wide Web is like an iceberg. What we browse daily — the visible layer — is only a small fraction of what truly exists online. It’s divided into three distinct zones:

Surface Web (≈ 5%)

Everything that’s open, indexed, and searchable through search engines. This includes Wikipedia, blogs, open news sites, GitHub, and public forums.

Deep Web (≈ 90–95%)

The massive portion hidden behind logins, paywalls, or restricted networks — research papers, academic databases, corporate intranets, legal filings, financial systems, and private cloud data.

Dark Web (tiny, but opaque)

Encrypted networks like .onion domains — anonymous, unindexed, and intentionally isolated for privacy or secrecy.

So while it feels like the internet holds infinite information, the “open web” that search engines (and public LLMs) can actually reach is just the tip of the digital iceberg.

What LLMs actually train on

LLMs are trained only on that ≈5% Surface Web — specifically the parts that are:

- Publicly available (no login or subscription required)

- Crawlable and indexable (allowed by robots.txt and open-access policies)

Even inside that 5%, many major platforms restrict crawlers or license their data separately. So while their content looks public, it’s often invisible to model training.

Bottom line: LLMs don’t learn from the entire internet — they learn from the accessible internet: what’s visible, open, and permitted to be read by machines.

Why private LLMs matter

If public LLMs float on the Surface Web, enterprise and private LLMs are built to dive deeper. General-purpose models (GPT, Gemini, Claude, etc.) are broad but shallow — they understand language, not your business. The real power comes when organizations train or fine-tune models with their internal data — information that never touches the open internet.

That hidden 95% becomes an advantage when applied correctly:

- Healthcare: Models trained on anonymized medical records for diagnosis support, drug discovery, and patient engagement — under compliance controls.

- Finance: Fine-tuned on transaction histories, fraud reports, and internal risk models for smarter risk analysis and detection.

- Manufacturing & Energy: Models trained on maintenance logs, sensor feeds, and field reports to enable predictive maintenance and operational intelligence.

These private models don’t compete with public LLMs — they extend them. Securely. Contextually. Purposefully.

The security imperative

Private LLMs are far more powerful than their public counterparts — and far more sensitive. They hold confidential, proprietary, and often irreplaceable data, such as:

- Corporate strategy documents and intellectual property

- Patient health records and diagnostic data

- Financial transactions, risk models, and regulatory reports

That’s why AI security is not optional — it’s existential. Every layer from training pipelines to inference APIs is a potential attack surface. Prompt injection, data exfiltration, model inversion, insider misuse — these are not hypothetical; they are happening now.

Building secure AI means embedding cybersecurity from the foundation — not bolting it on later. Protecting data integrity, provenance, and access is survival, not a checkbox.

Conclusion

The future of AI won’t come from a single giant model that knows everything. It will come from thousands of specialized, domain-trained models that know something deeply and safely. Public LLMs provide language understanding. Private LLMs provide contextual, high-value intelligence. LLMs will redefine intelligence. Cybersecurity will decide who survives it.